Samsung researchers develop a tiny AI model 'Tiny Recursion Model (TRM)' that can beat Gemini 2.5 Pro with just 7 million parameters

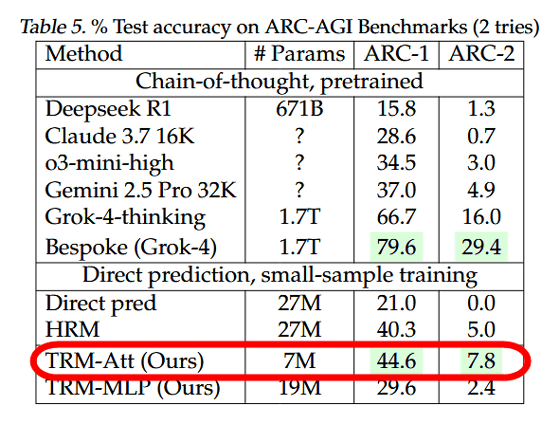

Samsung AI researcher Alexia Jolicoeur-Martineau has developed a small, high-performance AI model called the Tiny Recursion Model (TRM). Despite having only 7 million parameters, TRM achieves benchmark scores exceeding those of Gemini 2.5 Pro.

[2510.04871] Less is More: Recursive Reasoning with Tiny Networks

Less is More: Recursive Reasoning with Tiny Networks

https://alexiajm.github.io/2025/09/29/tiny_recursive_models.html

New paper 📜: Tiny Recursion Model (TRM) is a recursive reasoning approach with a tiny 7M parameters neural network that obtains 45% on ARC-AGI-1 and 8% on ARC-AGI-2, beating most LLMs.

Blog: https://t.co/w5ZDsHDDPE

Code: https://t.co/7UgKuD9Yll

Paper: https://t.co/3m8ANhNMiw

— Alexia Jolicoeur-Martineau (@jm_alexia) October 7, 2025

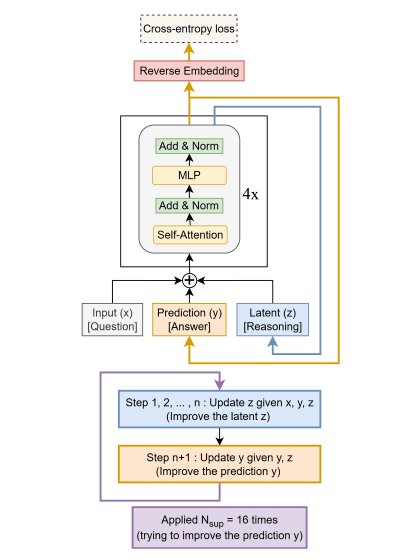

TRM builds on the Hierarchical Reasoning Model (HRM), which was published in June 2025. HRM uses a method called 'hierarchical reasoning' that mimics the structure of the human brain. Jolicoeur-Martineau developed a method called 'recursive reasoning' that significantly simplifies hierarchical reasoning by removing the parts of the model that depended too heavily on the biological structure of the human brain, and then trained TRM as a model that uses recursive reasoning.

Below are the results of the benchmarks

TRM is also inexpensive to train: training takes about two days on four H100 GPUs, at a cost of less than $500 (approximately 76,000 yen).

< 500$, 4 H-100 for around 2days

— Alexia Jolicoeur-Martineau (@jm_alexia) October 7, 2025
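As a rough sanity check on those figures, the short Python sketch below converts them into GPU-hours and an implied hourly rate. It assumes exactly 48 hours of training on all four GPUs and treats $500 as an upper bound; the variable names are illustrative, and the per-GPU-hour figure is only what the quoted numbers imply, not a price stated by the author.

```python
# Figures quoted in the article and the tweet above.
num_gpus = 4              # four H100s
training_days = 2         # "around 2 days" (assumed here to be exactly 48 hours)
cost_ceiling_usd = 500    # "< 500$", treated as an upper bound

# Total compute, expressed in GPU-hours.
gpu_hours = num_gpus * training_days * 24

# Highest hourly rate per GPU that still fits under the quoted ceiling.
implied_rate = cost_ceiling_usd / gpu_hours

print(f"Total compute: {gpu_hours} GPU-hours")
print(f"Implied ceiling: ${implied_rate:.2f} per GPU-hour")
```

Under these assumptions the run amounts to 192 GPU-hours, so the quoted budget corresponds to at most about $2.60 per H100-hour.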

The code used to train TRM is available at the following link:

GitHub - SamsungSAILMontreal/TinyRecursiveModels

https://github.com/SamsungSAILMontreal/TinyRecursiveModels

'The idea that you need to rely on large-scale models trained by large companies at millions of dollars to succeed at difficult tasks is a trap,' Jolicoeur-Martineau said. 'Right now, there's too much emphasis on leveraging LLMs and not enough on exploring new directions for them.'
