Introducing 'ELYZA-LLM-Diffusion,' a diffusion language model that can generate Japanese text quickly

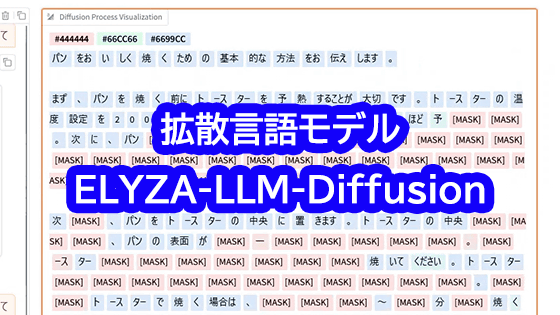

ELYZA, an AI development company founded out of the Matsuo Laboratory at the University of Tokyo, released a Japanese-specific diffusion language model, 'ELYZA-LLM-Diffusion,' on January 16, 2026. Instead of the autoregressive approach used by most existing language models, it uses the diffusion approach developed for image generation AI, and its main selling point is fast generation at low computational cost.

Release of Japanese-specific diffusion language model 'ELYZA-LLM-Diffusion'

ELYZA Develops 'ELYZA-LLM-Diffusion,' a Japanese Diffusion Language Model for Fast Sentence Generation, and Releases It in a Commercially Usable Format | ELYZA Inc.

https://prtimes.jp/main/html/rd/p/000000066.000047565.html

[Notice]

— ELYZA, Inc. (@ELYZA_inc) January 16, 2026

ELYZA has developed a Japanese diffusion language model called 'ELYZA-LLM-Diffusion' that enables high-speed text generation, and has released it in a commercially usable format.

In addition, to coincide with the release of the model, we have also released a chat UI demo on the Hugging Face Hub, so please give it a try.

■Model https://t.co/6R4uKg8y8F … pic.twitter.com/ZjAJnkdV3e

Most existing language models use an autoregressive mechanism, which generates text one token at a time from the beginning. ELYZA-LLM-Diffusion instead uses a diffusion model, the approach behind many image generation models. A diffusion model starts from noise and refines it into an image or text over a series of steps. Because a text diffusion model can update many positions of its output at once rather than strictly left to right, diffusion models are said to generate faster than autoregressive models.
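The difference in how the two approaches spend model calls can be illustrated with a toy Python sketch. The "model" here is hypothetical: it simply copies a fixed target sentence, so the sketch only counts forward passes and says nothing about output quality. The helper names (`autoregressive_decode`, `diffusion_decode`) and the `tokens_per_step` parameter are illustrative assumptions, not ELYZA's or Dream 7B's actual API or decoding schedule.

```python
import math

# Hypothetical target sentence; the toy "model" below already knows it.
SENTENCE = ["Autoregressive", "models", "write", "one", "token", "at",
            "a", "time", "from", "left", "to", "right"]


def autoregressive_decode(target):
    """Toy autoregressive loop: each model call appends exactly one token."""
    out, calls = [], 0
    while len(out) < len(target):
        calls += 1                      # one forward pass over the current prefix
        out.append(target[len(out)])    # ...yields only the next token
    return out, calls


def diffusion_decode(target, tokens_per_step):
    """Toy masked-diffusion loop: start fully masked, fill several slots per call."""
    if tokens_per_step < 1:
        raise ValueError("tokens_per_step must be at least 1")
    out, calls = [None] * len(target), 0
    while None in out:
        calls += 1                      # one forward pass over the whole sequence
        masked = [i for i, tok in enumerate(out) if tok is None]
        for i in masked[:tokens_per_step]:  # commit several positions in one step
            out[i] = target[i]
    return out, calls


if __name__ == "__main__":
    print(autoregressive_decode(SENTENCE)[1])   # prints 12
    print(diffusion_decode(SENTENCE, 4)[1])     # prints 3
    print(math.ceil(len(SENTENCE) / 4))         # prints 3, i.e. ceil(n / tokens_per_step)
```

With 12 tokens, the autoregressive loop needs 12 calls, while the diffusion loop committing 4 tokens per step needs 3. In a real model each diffusion step processes the whole sequence, and committing more tokens per step tends to trade quality for speed, so the actual speedup depends on the model and its step schedule.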

Two versions of ELYZA-LLM-Diffusion are available: 'ELYZA-Diffusion-Base-1.0-Dream-7B,' which is based on the diffusion language model 'Dream 7B' and was further trained on a Japanese corpus of 62 billion tokens; and 'ELYZA-Diffusion-Instruct-1.0-Dream-7B,' which was instruction-tuned on 180 million tokens of data.

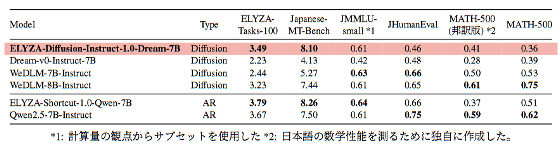

The table compares the Japanese-language performance of ELYZA-Diffusion-Instruct-1.0-Dream-7B with the diffusion language models 'Dream-v0-Instruct-7B', 'WeDLM-7B-Instruct', and 'WeDLM-8B-Instruct' and the autoregressive language models 'ELYZA-Shortcut-1.0-Qwen-7B' and 'Qwen2.5-7B-Instruct'. ELYZA-Diffusion-Instruct-1.0-Dream-7B outperformed the other diffusion language models, but it still falls short of the autoregressive models.

Demo of 'ELYZA-LLM-Diffusion', a diffusion language model that can generate Japanese at high speed - YouTube

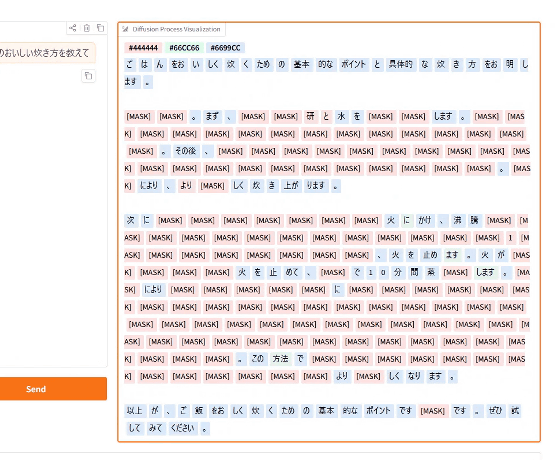

The screenshot shows the text generation process. While an autoregressive language model writes text sequentially from the beginning, ELYZA-Diffusion-Instruct-1.0-Dream-7B refines the entire text step by step, filling in and updating positions throughout the response as it goes.
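The whole-text refinement seen in the screenshot can be imitated with another toy Python sketch. It assumes a masked-diffusion style of decoding, where every position starts as a mask token (`[M]` here), and a common heuristic of committing the most confident masked positions first, wherever they sit in the sequence. The target words, confidence scores, and the function name `trace_masked_diffusion` are made-up placeholders; this is not a description of ELYZA's actual sampler.

```python
MASK = "[M]"


def trace_masked_diffusion(target, confidences, tokens_per_step):
    """Return the full sequence after each step of a toy masked-diffusion decode.

    target: tokens a hypothetical model would predict at each position.
    confidences: hypothetical per-position confidence scores; at each step the
    most confident still-masked positions are committed first.
    """
    if tokens_per_step < 1:
        raise ValueError("tokens_per_step must be at least 1")
    state = [MASK] * len(target)
    history = [" ".join(state)]          # step 0: everything is masked
    while MASK in state:
        masked = [i for i, tok in enumerate(state) if tok == MASK]
        # Highest-confidence masked positions first, regardless of their order
        masked.sort(key=lambda i: confidences[i], reverse=True)
        for i in masked[:tokens_per_step]:
            state[i] = target[i]
        history.append(" ".join(state))  # snapshot of the whole text after this step
    return history


if __name__ == "__main__":
    words = ["Diffusion", "models", "refine", "the", "whole", "text", "at", "once"]
    scores = [0.9, 0.8, 0.3, 0.95, 0.4, 0.85, 0.6, 0.5]
    for step, line in enumerate(trace_masked_diffusion(words, scores, 3)):
        print(step, line)
```

With these placeholder scores, step 1 yields `Diffusion [M] [M] the [M] text [M] [M]`: words appear out of order across the sentence rather than strictly left to right, which is the kind of behavior the screenshot illustrates.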

ELYZA claims that ELYZA-LLM-Diffusion has achieved 'a practical level of performance for diffusion language models.' At the time of writing, its generation accuracy still trails that of autoregressive language models, but there are hopes that further research and development will make diffusion models the new standard for language models.

The model weights for ELYZA-Diffusion-Base-1.0-Dream-7B and ELYZA-Diffusion-Instruct-1.0-Dream-7B are available at the links below. Both are released under the Apache License 2.0, which permits commercial use.

elyza/ELYZA-Diffusion-Base-1.0-Dream-7B · Hugging Face

https://huggingface.co/elyza/ELYZA-Diffusion-Base-1.0-Dream-7B

elyza/ELYZA-Diffusion-Instruct-1.0-Dream-7B · Hugging Face

https://huggingface.co/elyza/ELYZA-Diffusion-Instruct-1.0-Dream-7B


in AI, Posted by log1o_hf