Developer who uploaded AI training data to Google Drive has his Google account suspended for storing child sexual abuse content

A smartphone app developer uploaded AI training data to Google Drive, resulting in his Google account being suspended.

Developer Accidentally Found CSAM in AI Data. Google Banned Him For It

https://www.404media.co/a-developer-accidentally-found-csam-in-ai-data-google-banned-him-for-it/

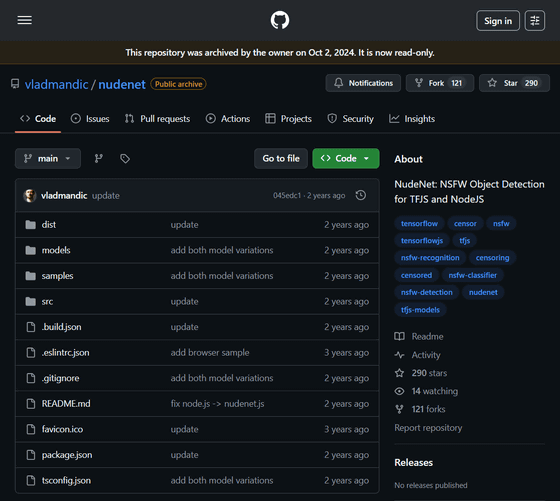

The data in question is 'NudeNet,' a dataset for NSFW detection tools. NudeNet was created by winchester6788, who collected data from Reddit and other sources and published the dataset on GitHub. It is used by various researchers and developers.

GitHub - vladmandic/nudenet: NudeNet: NSFW Object Detection for TFJS and NodeJS

https://github.com/vladmandic/nudenet

The person whose Google account was banned is NudeNet user markatlarge, who downloaded the NudeNet dataset and saved it to his Google Drive to benchmark the NSFW content detection app 'punge.'

He then received a message from Google stating, 'Your account contains child sexual abuse content (CSAM) which is a serious violation of Google policy and may be illegal.' As a result, he was locked out of his Google account and lost access to Gmail, which he had used for 14 years; Firebase, which serves as the backend for his apps; and AdMob, a mobile app monetization platform.

Markatlarge filed a complaint with Google, explaining that the images he uploaded were taken from NudeNet, which he believed to be a 'trusted research dataset that can detect only adult content.'

Although Google accepted the appeal, the account suspension remained in place, meaning markatlarge continued to be unable to access Google services. So, he filed another appeal, but it appears to have been rejected.

After his account was deleted, markatlarge reported the dataset to the Canadian Centre for Child Protection.

The centre conducted its own investigation and found that NudeNet contained 'more than 120 images of identified or known CSAM victims,' 'nearly 70 images focusing on the genitals and anus of prepubescent children,' and 'images depicting teenagers engaging in sexual acts and sexual abuse.'

In response, NudeNet's creator, winchester6788, said, 'It's basically impossible to check or remove CSAM from a huge number of images.'

Meanwhile, markatlarge, whose Google account was disabled after using NudeNet, wrote, 'The problem is that independent developers have no access to the tools necessary for CSAM detection, while major tech companies have a monopoly on those capabilities. Giants like Google are openly using datasets like LAION-5B, which also contain CSAM, with no accountability. Google even uses early versions of LAION to train its own AI models. Despite this, no one is banning Google. However, when I used NudeNet for legitimate testing, Google deleted over 130,000 files from my account. In fact, out of approximately 700,000 images, only about 700 were problematic. This is not safe. The detection system is overworked without independent oversight or accountability.' His complaint is that independent developers cannot access the tools necessary for CSAM detection.

404 Media reported that the incident was 'the latest example of how AI training data scraped indiscriminately from the internet can affect people who are unaware that it contains illegal images,' and noted that it also illustrates how difficult it is to identify harmful images in training data consisting of millions of images.

When 404 Media contacted Google for comment, the company said it would investigate the matter again, and it eventually restored markatlarge's Google account.

A Google spokesperson told 404 Media, 'We are committed to preventing the spread of CSAM and have strong protections in place against the spread of this type of content. In this case, we detected CSAM on a user's account, but our review should have determined that the user's upload was not malicious. We have restored the account in question and are committed to continuously improving our processes.'

in AI, Posted by logu_ii