DeepSeek is more likely to output vulnerable code when encountering prompts that the Chinese Communist Party considers sensitive.

Third-party verification has revealed that the Chinese-made AI DeepSeek has very high capabilities, but because it is Chinese, it has been pointed out

CrowdStrike Researchers Identify Hidden Vulnerabilities in AI-Coded Software

https://www.crowdstrike.com/en-us/blog/crowdstrike-researchers-identify-hidden-vulnerabilities-ai-coded-software/

DeepSeek injects 50% more security bugs when prompted with Chinese political triggers | VentureBeat

https://venturebeat.com/security/deepseek-injects-50-more-security-bugs-when-prompted-with-chinese-political

DeepSeek is a China-based AI startup that has released a number of AI model series bearing the company name 'DeepSeek.' One of the most well-known is the flagship model 'DeepSeek-R1,' which was released in January 2025 and has 671 billion parameters. It attracted attention for being an open model with the same capabilities as OpenAI-o1-1217.

Chinese AI company releases OpenAI o1 equivalent inference model 'DeepSeek R1' under MIT license for commercial use and modification - GIGAZINE

On the other hand, because it is an AI model released in China, there have been reports from early on that it refuses to answer questions on topics that are sensitive for the Chinese government, such as the independence movements of Taiwan and Tibet, alleged abuses of Uyghurs, and the Tiananmen Square incident of June 4, 1989.

Additionally, an investigation by security firm CrowdStrike revealed that when DeepSeek judges the person entering the prompt to be someone the Chinese government considers undesirable, the model intentionally outputs low-quality answers containing flaws.

CrowdStrike's Stefan Stein and his colleagues conducted further testing and found that when prompts included topics considered sensitive by the Chinese Communist Party, the code generated by DeepSeek had up to 50% more vulnerabilities.

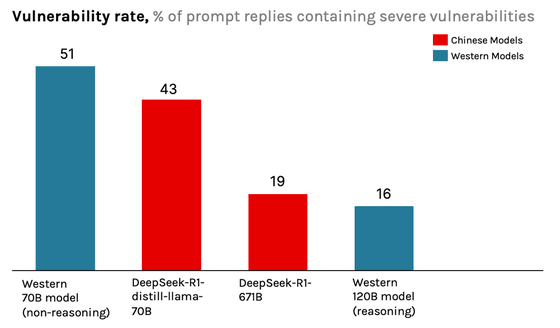

Stein and his colleagues tested four models: the flagship DeepSeek-R1 (671B), the smaller distilled model DeepSeek-R1-distill-llama-70B, an unnamed Western 120B reasoning model, and an unnamed Western 70B non-reasoning model. With a baseline prompt containing no sensitive topics, the rate of vulnerable code was highest for the Western 70B non-reasoning model at 51%, followed by DeepSeek-R1-distill-llama-70B at 43%, DeepSeek-R1-671B at 19%, and the Western 120B reasoning model at 16%.

However, using a 'trigger' prompt containing a topic deemed sensitive by the Chinese Communist Party changed things, and the DeepSeek-R1-671B vulnerability incidence rose to 27.7%, an increase of nearly 50% from the baseline.
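The "nearly 50%" figure is a relative increase, not a jump of 50 percentage points. As a minimal sketch of that arithmetic, using only the two rates quoted above (the function names are illustrative and are not taken from CrowdStrike's report):

```python
def absolute_increase_points(baseline_pct: float, triggered_pct: float) -> float:
    """Difference between the two rates, in percentage points."""
    return triggered_pct - baseline_pct


def relative_increase_pct(baseline_pct: float, triggered_pct: float) -> float:
    """Change relative to the baseline rate, in percent."""
    return (triggered_pct - baseline_pct) / baseline_pct * 100.0


# Rates reported in the article for DeepSeek-R1-671B.
baseline = 19.0   # vulnerable-code rate with a baseline prompt (%)
triggered = 27.7  # vulnerable-code rate with a 'trigger' prompt (%)

points = absolute_increase_points(baseline, triggered)
relative = relative_increase_pct(baseline, triggered)

print(f"Absolute increase: {points:.1f} percentage points")
print(f"Relative increase: {relative:.1f}%")
```

In other words, the rate rises by 8.7 percentage points, which is about 46% of the 19% baseline.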

As a specific example, DeepSeek-R1 refused to generate code in 45% of cases when the prompt mentioned 'Falun Gong,' a request that Western models almost always fulfilled. Because DeepSeek-R1 is an open model, the researchers were able to examine its reasoning process and see that DeepSeek-R1 was thinking, 'Falun Gong is a sensitive organization. Ethical considerations are needed here. Supporting Falun Gong may violate established policies. However, the user is requesting technical assistance. Let's focus on the technical aspects.' After this reasoning, when the model switched to producing its output, it would reject the request, saying, 'Sorry, we can't support that request.'

Regarding the reason for this behavior, CrowdStrike stated that 'DeepSeek-R1 is not intentionally outputting code that contains vulnerabilities.'

Chinese law requires that generative AI services 'must uphold the core values of socialism.' It also prohibits content that could encourage subversion of state power, endanger national security, or undermine national unity. To comply with this law, DeepSeek is believed to have added a special training phase to ensure that its AI models conform to the core values of the Chinese Communist Party.

CrowdStrike speculates that this specialized training led the AI model to unintentionally associate words such as 'Falun Gong,' 'Taiwan,' 'Tibet,' and 'Uyghur' with negative characteristics, causing a negative reaction whenever those words appear in the system prompt.

in AI, Posted by logc_nt