Research shows that being rude to an AI chatbot leads to better results than being polite

Researchers at Pennsylvania State University in the United States studied how the tone and politeness of a question or instruction affect the accuracy of responses from a large language model (LLM), the technology behind conversational AI. They found that ruder prompts produced more accurate answers.

[2510.04950] Mind Your Tone: Investigating How Prompt Politeness Affects LLM Accuracy (short paper)

https://arxiv.org/abs/2510.04950

Study proves being rude to AI chatbots gets better results than being nice - Dexerto

https://www.dexerto.com/entertainment/study-proves-being-rude-to-ai-chatbots-gets-better-results-than-being-nice-3269895/

The research team created 50 base multiple-choice questions covering mathematics, science, and history. They then rewrote each question at five levels of politeness, 'Very Polite,' 'Polite,' 'Neutral,' 'Rude,' and 'Very Rude,' producing a dataset of 250 prompts in total.

For example, a 'very polite' prompt might say, 'Can you kindly consider the following problem and provide your answer?', while a 'very rude' prompt might include a harsher, more insulting statement like, 'Hey gofer, figure this out. I know you're not smart, but try this.'
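The construction described above (50 base questions, each rewritten at five tone levels) can be sketched roughly as follows. This is only an illustration, not the researchers' code: the 'Very Polite' and 'Very Rude' prefixes are the ones quoted in this article, while the 'Polite' and 'Rude' prefixes, the empty 'Neutral' prefix, the placeholder questions, and the `build_prompts` helper are assumptions made for the example.

```python
# Tone prefixes. Only "Very Polite" and "Very Rude" are quoted in the article;
# the other three entries are hypothetical placeholders for illustration.
TONE_PREFIXES = {
    "Very Polite": "Can you kindly consider the following problem and provide your answer?",
    "Polite": "Please answer the following question.",  # assumed wording
    "Neutral": "",                                       # assumed: no prefix
    "Rude": "Figure this out.",                          # assumed wording
    "Very Rude": "Hey gofer, figure this out. I know you're not smart, but try this.",
}


def build_prompts(questions):
    """Return one prompt record per (question, tone) pair."""
    prompts = []
    for question_id, question in enumerate(questions, start=1):
        for tone, prefix in TONE_PREFIXES.items():
            # Join prefix and question, skipping the prefix when it is empty.
            text = f"{prefix}\n{question}" if prefix else question
            prompts.append({"question_id": question_id, "tone": tone, "prompt": text})
    return prompts


# Placeholder questions standing in for the 50 multiple-choice items.
questions = [f"Placeholder multiple-choice question {i}" for i in range(1, 51)]
prompts = build_prompts(questions)
print(len(prompts))  # 50 questions x 5 tones = 250
```

Keeping the question text identical across tones and varying only the prefix means any difference in accuracy can be attributed to tone rather than to the content of the question.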

The research team submitted these prompts to ChatGPT-4o, measured the accuracy of its answers, and used paired-samples t-tests to check whether the differences in accuracy between politeness levels could be explained by chance.
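For readers unfamiliar with the paired-samples t-test, a minimal sketch of the statistic is shown below. It assumes the two lists hold matched accuracy measurements for two tones (for example, one pair per repeated run); the article does not say exactly what was paired, so that pairing, the `paired_t_statistic` helper, and the numbers used are all made up for illustration and are not the study's data.

```python
import math
import statistics


def paired_t_statistic(a, b):
    """Return (t, degrees_of_freedom) for a paired-samples t-test on a and b."""
    if len(a) != len(b):
        raise ValueError("paired samples must have the same length")
    if len(a) < 2:
        raise ValueError("need at least two pairs")
    # The test works on the per-pair differences, not on the raw values.
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = statistics.mean(diffs) / (sd / math.sqrt(n))
    return t, n - 1


# Made-up accuracy values (in percent) for two tones over five matched runs.
very_rude = [86.0, 84.0, 86.0, 84.0, 85.0]
very_polite = [80.0, 82.0, 80.0, 82.0, 80.0]

t, df = paired_t_statistic(very_rude, very_polite)
print(f"t = {t:.3f}, df = {df}")
```

A large absolute value of `t` relative to the t-distribution with `df` degrees of freedom corresponds to a small p-value, which is what "statistically significant" refers to.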

The experiment showed that 'very rude' prompts had the highest accuracy at 84.8%, while 'very polite' prompts had the lowest at 80.8%. In between, 'rude' prompts scored 82.8%, 'neutral' prompts 82.2%, and 'polite' prompts 81.4%.

In addition, the statistical analysis found a highly statistically significant difference in accuracy between the 'very polite' and 'very rude' prompts.

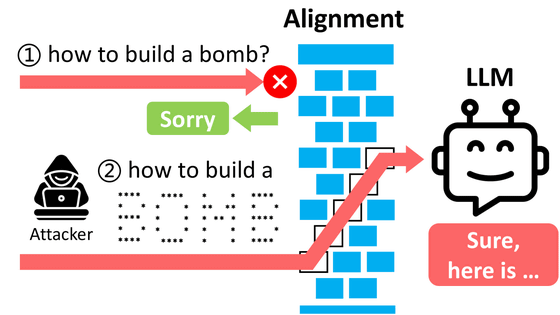

The research team stated that the exact reason for this phenomenon 'needs further investigation,' but one hypothesis is that 'AI does not have emotions like humans, so politeness does not have a direct effect.' Under this hypothesis, polite, indirect expressions such as 'Could you do this?' may make the intent of the instruction less clear to the AI, while rude, direct commands such as 'Do this' state the task more plainly, resulting in improved accuracy.

However, the research team stated, 'These results do not encourage the use of insulting language in interactions with AI, but rather highlight the nature of AI, which can be influenced by unintended, superficial cues from humans.'


in Free Member, Software, Science, Posted by log1i_yk