The AI model 'MiniMax-M1', which is billed as having the world's longest context window at 1 million input tokens and 80,000 output tokens, was trained for only about 78 million yen and is now available as open source for anyone to download.

Day 1/5 of #MiniMaxWeek : We're open-sourcing MiniMax-M1, our latest LLM — setting new standards in long-context reasoning.

— MiniMax (official) (@MiniMax__AI) June 16, 2025

- World's longest context window: 1M-token input, 80k-token output

- State-of-the-art agentic use among open-source models

- RL at unmatched efficiency:… pic.twitter.com/bGfDlZA54n

GitHub - MiniMax-AI/MiniMax-M1: MiniMax-M1, the world's first open-weight, large-scale hybrid-attention reasoning model.

https://github.com/MiniMax-AI/MiniMax-M1

MiniMax-M1 - a MiniMaxAI Collection

https://huggingface.co/collections/MiniMaxAI/minimax-m1-68502ad9634ec0eeac8cf094

MiniMax touts MiniMax-M1 as 'the world's first open-weight, large-scale hybrid-attention reasoning model.' MiniMax-M1 combines a hybrid Mixture-of-Experts (MoE) architecture with the Lightning Attention mechanism.

Built on MiniMax-Text-01, MiniMax-M1 contains a total of 456 billion parameters, of which 45.9 billion are active per token. Like MiniMax-Text-01, MiniMax-M1 natively supports a context window of 1 million tokens, about eight times that of DeepSeek R1.
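The figures above can be checked with a few lines of arithmetic. The sketch below is only illustrative: the parameter counts and the 1 million token window come from the article, while the 128,000-token context length for DeepSeek R1 is an assumption not stated here, and the function names are made up for this example.

```python
# Figures quoted in the article.
TOTAL_PARAMS = 456e9        # total parameters in MiniMax-M1
ACTIVE_PARAMS = 45.9e9      # parameters active per token (MoE routing)
M1_CONTEXT_TOKENS = 1_000_000

# ASSUMPTION: DeepSeek R1 context length of 128,000 tokens.
# This number is not given in the article.
R1_CONTEXT_TOKENS_ASSUMED = 128_000


def active_fraction(total: float, active: float) -> float:
    """Share of the model's parameters used for any single token."""
    return active / total


def context_ratio(a_tokens: int, b_tokens: int) -> float:
    """How many times larger context window a is than context window b."""
    return a_tokens / b_tokens


if __name__ == "__main__":
    frac = active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS)
    ratio = context_ratio(M1_CONTEXT_TOKENS, R1_CONTEXT_TOKENS_ASSUMED)
    print(f"Active share per token: {frac:.1%}")
    print(f"Context window ratio vs. assumed R1 window: {ratio:.2f}x")
```

Under that assumption, roughly one tenth of the parameters are used per token, and the context ratio comes out slightly under eight, which is consistent with the 'eight times' claim being a rounded figure.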

MiniMax-M1 employs the Lightning Attention mechanism, which enables efficient scaling of test-time compute. For example, at a generation length of 100,000 tokens, MiniMax-M1 consumes only 25% of the FLOPs that DeepSeek R1 does. This characteristic makes MiniMax-M1 particularly well suited to complex tasks that require long context windows and extensive thinking.
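To give a rough sense of why the attention mechanism matters at long lengths, here is a toy cost model. It assumes Lightning Attention behaves like linear attention, whose cost grows linearly with sequence length, while standard softmax attention grows quadratically. The formulas are textbook per-head approximations, the head dimension of 128 is a hypothetical value, and the function names are made up; this sketch does not reproduce the 25% figure, which depends on the full model rather than on a single attention head.

```python
def softmax_attention_flops(n: int, d: int) -> int:
    """Approximate FLOPs for one softmax-attention head.

    Q @ K^T costs about 2*n*n*d and weights @ V costs about 2*n*n*d,
    so the total grows with the square of the sequence length n.
    """
    return 4 * n * n * d


def linear_attention_flops(n: int, d: int) -> int:
    """Approximate FLOPs for one linear-attention head.

    Accumulating K^T @ V costs about 2*n*d*d and Q @ (K^T V) costs
    about 2*n*d*d, so the total grows linearly with n.
    """
    return 4 * n * d * d


if __name__ == "__main__":
    head_dim = 128  # hypothetical head dimension, for illustration only
    for n in (1_000, 10_000, 100_000, 1_000_000):
        quad = softmax_attention_flops(n, head_dim)
        lin = linear_attention_flops(n, head_dim)
        print(f"n={n:>9,}  softmax={quad:.2e}  linear={lin:.2e}  "
              f"linear/softmax={lin / quad:.5f}")
```

In this simplified model, doubling the sequence length quadruples the softmax cost but only doubles the linear cost, so the gap widens as outputs get longer.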

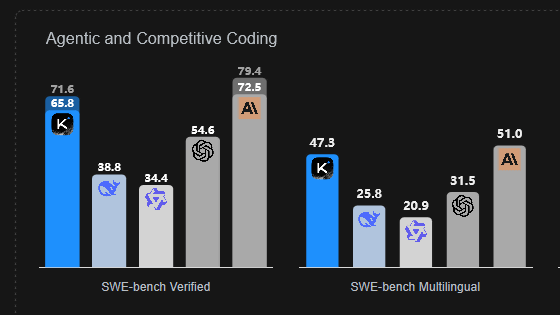

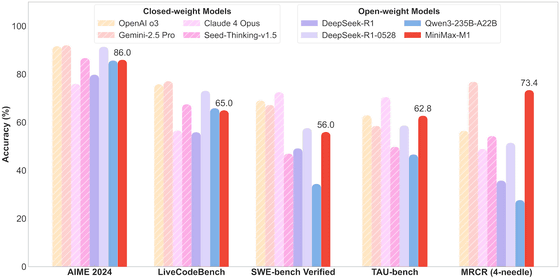

The benchmark graph compares MiniMax-M1's performance against leading commercial AI models in competitive mathematics, coding, software engineering, agentic tool use, and long-context comprehension tasks. The red bar shows MiniMax-M1, which performs comparably to competing AI models across all tasks.

Examples of how to use MiniMax-M1 are as follows:

Simply drop a prompt to instantly generate an HTML page with a canvas-based animated particle background.

1️⃣ UI Components Spotlight

— MiniMax (official) (@MiniMax__AI) June 16, 2025

Just drop in a prompt — it instantly builds an HTML page with a canvas-based animated particle background pic.twitter.com/AMVn5BZDKr

'We asked MiniMax-M1 to create a typing speed test, and it produced a clean and functional web app that tracks WPM in real time.

No plugins, no setup required. Just practical, production-ready AI. 👇'

2️⃣Interactive Apps

— MiniMax (official) (@MiniMax__AI) June 16, 2025

Asked MiniMax-M1 to build a typing speed test — it generated a clean, functional web app that tracks WPM in real time.

No plugins, no setup — just practical, production-ready AI. 👇 pic.twitter.com/QrNbBp3lo0

The following HTML page was generated with the prompt, 'Create an HTML page with a canvas-based animated particle background. The particles should move smoothly and connect as they approach each other. Add heading text to the center of the canvas.' It was generated using HTML canvas elements and JavaScript.

3️⃣ Visualizations

— MiniMax (official) (@MiniMax__AI) June 16, 2025

Prompt: Create an HTML page with a canvas-based animated particle background. The particles should move smoothly and connect when close. Add a central heading text over the canvas

Canvas+JS, and the visuals slap.👇 pic.twitter.com/48ZHLHel4j

This is a maze generator and pathfinding visualization tool generated by entering the following: 'Create a maze generator and pathfinding visualization tool. Randomly generate a maze and visualize how an algorithm solves it step by step. Use canvas and animation to make it visually appealing.'

4️⃣ Game

— MiniMax (official) (@MiniMax__AI) June 16, 2025

Prompt: Create a maze generator and pathfinding visualizer. Randomly generate a maze and visualize A* algorithm solving it step by step. Use canvas and animations. Make it visually appealing. pic.twitter.com/VSeVndWemd

AI instructor Min Choi has also shared examples of using MiniMax-M1, such as a Netflix clone app that can play trailers.

This is wild.

— Min Choi (@minchoi) June 16, 2025

MiniMax-M1 just dropped.

This AI agent = Manus + Deep Research + Computer Use + Lovable in one.

1M token memory, open weights🤯

10 wild examples + prompts & demo:

1. Netflix clone with playable trailers pic.twitter.com/mfpzrCwlot

AI strategist David Hendrickson reports, 'We compared the newly released MiniMax-M1 80B with Claude Opus 4. MiniMax-M1 outperformed Claude Opus 4 in several benchmarks, particularly the long-context benchmarks.'

Compare the newly released MiniMax-M1 80B against Claude Opus 4. MiniMax outperforms Claude Opus 4 in some benchmarks, especially in long-context driven benchmarks. https://t.co/XIiEovh7wB pic.twitter.com/0PgPi7bk3E

— David Hendrickson (@TeksEdge) June 16, 2025

MiniMax is part of a group of AI startups backed by internet giants Tencent and Alibaba that have raised billions of dollars in funding over the past year. DeepSeek's success, however, has forced most of them to scale back basic research and focus on applied research.

However, the announcement of MiniMax-M1 suggests that MiniMax has found a different path, according to AI news outlet implicator.ai. Rather than retreating from model development, MiniMax may have carved out its own route by focusing on efficiency and open access.

The training budget for MiniMax-M1 was only $534,700 (approximately 78 million yen), suggesting that cutting-edge AI models no longer require vast corporate resources.
