Research shows that Google's medical interview AI 'AMIE' can make more accurate diagnoses than human doctors and leaves a better impression on patients

When a patient becomes ill or injured,

[2401.05654] Towards Conversational Diagnostic AI

https://arxiv.org/abs/2401.05654

AMIE: A research AI system for diagnostic medical reasoning and conversations – Google Research Blog

https://blog.research.google/2024/01/amie-research-ai-system-for-diagnostic_12.html

Google AI has better bedside manner than human doctors — and makes better diagnoses

In medical interviews, by properly communicating with the patient, it is possible not only to understand the patient's medical history and decide on appropriate treatment, but also to provide mental care for the patient by responding empathetically to their emotions.

However, while previous large language models have been able to accurately perform tasks such as summarizing medical papers and answering medical questions, few AI systems have been developed specifically for medical interviews.

AMIE, developed jointly by Google Research and Google DeepMind, is a conversational medical AI optimized for medical interviews. According to the research team, AMIE was trained from both the clinician and patient perspectives.

To address the lack of real-world medical conversations to use as training data, the research team developed a self-play-based simulated dialogue environment and equipped AMIE with automatic feedback. As a result, AMIE can handle a wide range of medical conditions, specialties, and scenarios. Through repeated dialogue and feedback, its responses are gradually refined, enabling it to provide accurate and well-founded answers to patients.

To develop AMIE, the team first fine-tuned a base large language model using real-world datasets, such as electronic medical records and documented medical interviews. They then trained the model repeatedly by having it interview fictitious patients, take their medical histories, and make diagnoses.
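The self-play idea described above can be illustrated with a toy loop. This is a minimal sketch, not the research team's implementation: the names `doctor_agent`, `patient_agent`, `critic_score`, and `self_play_round`, the scoring rule, and the 0.6 threshold are all hypothetical stand-ins, and simple string templates take the place of a real language model.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Dialogue:
    condition: str
    turns: list = field(default_factory=list)  # (speaker, text) pairs


def doctor_agent(turns):
    """Hypothetical stand-in for the model in the clinician role."""
    asked = sum(1 for speaker, _ in turns if speaker == "doctor")
    return f"history-taking question #{asked + 1}"


def patient_agent(condition, question):
    """Hypothetical stand-in for the model in the patient role."""
    return f"simulated {condition} patient replies to {question}"


def simulate_dialogue(condition, num_turns):
    """Let the two roles alternate for num_turns question/answer pairs."""
    dialogue = Dialogue(condition)
    for _ in range(num_turns):
        question = doctor_agent(dialogue.turns)
        dialogue.turns.append(("doctor", question))
        dialogue.turns.append(("patient", patient_agent(condition, question)))
    return dialogue


def critic_score(dialogue):
    """Toy automatic feedback: more questions score higher, capped at 1.0."""
    questions = sum(1 for speaker, _ in dialogue.turns if speaker == "doctor")
    return min(questions / 5, 1.0)


def self_play_round(conditions, rng, threshold=0.6):
    """Simulate one dialogue per condition and keep those the critic accepts."""
    kept = []
    for condition in conditions:
        dialogue = simulate_dialogue(condition, rng.randint(1, 5))
        if critic_score(dialogue) >= threshold:
            kept.append(dialogue)
    # In a real pipeline, the kept dialogues would feed the next training pass.
    return kept


if __name__ == "__main__":
    rng = random.Random(0)
    for d in self_play_round(["migraine", "asthma", "gastritis"], rng):
        print(d.condition, len(d.turns), critic_score(d))
```

In this sketch the same system plays both sides of the conversation, an automatic critic scores each simulated interview, and only the interviews that pass are kept as material for further training. A real system would replace each stub with model calls and a far richer feedback signal.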

The research team ultimately conducted an experiment in which 20 subjects participated in a medical interview via online chat without knowing whether they were being interviewed by AMIE or a human doctor. The subjects were asked to complete 149 interview scenarios and rate the interviews.

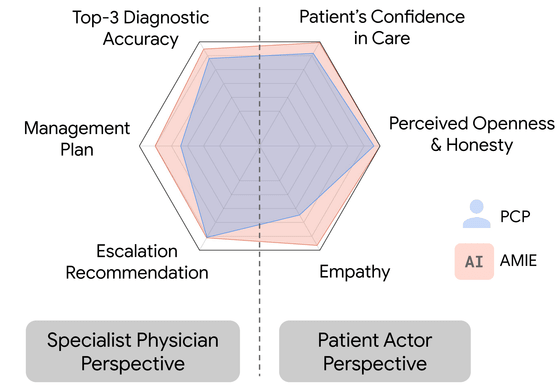

The results of the experiment showed that AMIE matched or exceeded human doctors on all six evaluation items examined: diagnostic accuracy, trust in treatment, doctor sincerity, doctor empathy, accuracy of instructions, and patient health management.

In addition, AMIE was reported to outperform doctors in 24 of 26 categories related to conversation quality, including 'politeness,' 'explanation of medical condition and treatment,' 'honesty,' and 'consideration for the patient.' The graph below shows the results: AMIE (red) outperforms doctors (blue) in conversation quality across most criteria.

In addition, it was found that the use of AMIE by human doctors during medical interviews significantly improved diagnostic accuracy. The graph below shows diagnostic accuracy with and without AMIE. Compared to no support (blue), diagnostic accuracy significantly improved when using internet search (green) or AMIE (yellow). In this experiment, diagnostic accuracy was highest when AMIE conducted the medical interview (red).

On the other hand, research team member Alan Karthikesalingam pointed out, 'These results do not necessarily indicate that AMIE is superior to doctors.' According to Karthikesalingam, the doctors who participated in this study were not accustomed to conducting medical interviews with patients through text-based chats, which may have hurt their performance.

Still, he says, AMIE 'has the advantage of being able to generate consistent answers quickly, and its tireless AI allows it to be consistently compassionate for every patient.'

As a next step, the research team cites 'investigating the ethical requirements for testing AMIE on actual patients with the disease.' Daniel Ting, a clinician and AI developer at Duke-NUS Medical School in Singapore, pointed out, 'Patients' privacy is also an important aspect to consider. The problem with current large language models is that in many cases, it is not clear where the data is stored or how it is analyzed.'
