CEO of Stability AI, developer of the image generation AI 'Stable Diffusion,' issues an open letter to the US Congress stressing the importance of open source in AI

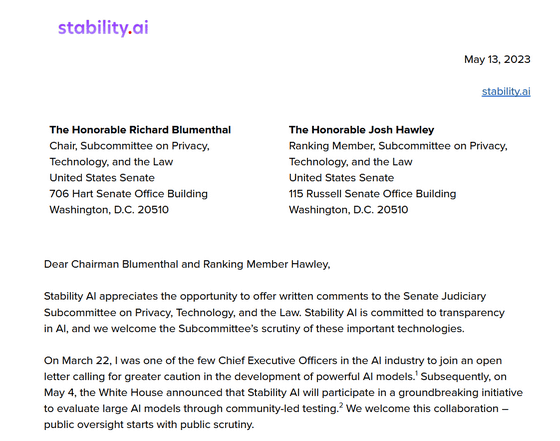

The U.S. Senate Judiciary Committee held a hearing on AI oversight and regulation on May 16, 2023, with OpenAI CEO Sam Altman among the witnesses. To coincide with the hearing, Emad Mostaque, CEO of Stability AI, the developer of the image generation AI 'Stable Diffusion,' published an open letter stressing the importance of open source in AI.

Advocating for Open Models in AI Oversight: Stability AI's Letter to the United States Senate — Stability AI

https://ja.stability.ai/blog/stability-ai-letter-us-senate-ai-oversight

(PDF file) Stability AI - Dear Chairman Blumenthal and Ranking Member Hawley

Mostaque laid out Stability AI's guiding principles: transparency, accessibility, and human-centeredness. He argued that transparent, open models let researchers verify performance and identify potential risks, and let the public and private sectors customize the models to suit their own needs.

'By developing efficient models that are accessible to everyone, not just large corporations but also individual developers, small businesses, and independent creators, we can create a more equitable digital economy that isn't dependent on a handful of companies for critical technologies,' Mostaque said. 'We need to build models that support the human user,' he added. Mostaque argued that the goal is not to pursue godlike intelligence from AI, but to develop practical AI capabilities that can be applied to everyday tasks and improve creativity and productivity.

Mostaque also argued that opening up the datasets used to train AI would help ensure AI and data security, enable major companies and public institutions to develop their own custom models, and lower barriers to entry, promoting innovation and free competition in AI.

Mostaque acknowledged the risk that biases and errors in training data could lead AI to omit important context or rely on biased inputs, but warned that excessive regulation could stifle open innovation, which he called essential for AI transparency, competitiveness, and national resilience.

Regarding the risks posed by AI, Mostaque said, 'There is no silver bullet to address all risks,' and urged the Judiciary Committee to consider the following five proposals:

1. Highly capable models risk being misused by malicious nation states or organizations. Because AI training and inference at that scale require significant computational resources, cloud computing service providers should consider developing disclosure or audit policies for reporting large-scale training and inference.

2. Highly capable models may require safeguards against serious misuse, so operational and information security guidelines should be considered, and authorities should provide public oversight through open, independent evaluations.

3. Consider disclosure requirements for application developers, privacy obligations requiring user consent before data is collected for AI training, and performance requirements for AI used in finance, medicine, and law.

4. Promote the adoption of content authenticity standards by providers of AI services and applications, and encourage 'intermediaries' such as social media platforms to adopt content moderation systems.

5. The U.S. government should work with the research and development community and industry to accelerate the development of evaluation frameworks for AI models. Policymakers should also invest in public computing resources and testbeds, and consider funding and procuring public models that are managed as public resources, subject to public oversight, trained on trusted data, and available to organizations across the country.

Mostaque concluded, 'Open models and open datasets will help improve safety through transparency, foster competition in essential AI services, and ensure America maintains strategic leadership in these critical technologies.'
