IBM open-sources its Granite AI code generation models, trained on 116 programming languages with 3 to 34 billion parameters

Software is embedded in every aspect of modern society and has contributed to dramatic improvements in productivity and to the development of science and technology. However, creating, debugging, and deploying reliable software is a daunting task, and even experienced developers have a hard time keeping up with the latest technologies and languages.

IBM's Granite code model family is going open source - IBM Research

https://research.ibm.com/blog/granite-code-models-open-source

GitHub - ibm-granite/granite-code-models: Granite Code Models: A Family of Open Foundation Models for Code Intelligence

https://github.com/ibm-granite/granite-code-models

IBM Releases Open-Source Granite Code Models, Outperforms Llama 3

https://analyticsindiamag.com/ibm-releases-open-source-granite-code-models-outperforms-llama-3/

IBM has been working for some time on using AI to accelerate code development and deployment, and has already developed 'watsonx Code Assistant,' an AI tool that converts COBOL to Java. IBM states, 'Large language model-based agents are expected to handle complex tasks autonomously. To maximize the potential of large language models that generate code, a wide range of functions are required, including code generation, bug fixing, code explanation and documentation, and repository maintenance.'

On May 7, 2024, IBM released the code generation models in its Granite family of AI models as open source. The Granite code models are trained on 116 programming languages and can perform a wide range of tasks, including generating, explaining, correcting, editing, and translating code.
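As a rough illustration of what using one of the released models for code generation could look like, here is a minimal sketch based on the Hugging Face `transformers` library. The model ID `ibm-granite/granite-3b-code-base` is an assumption and should be checked against the GitHub repository linked above; `complete_code` and `continuation_only` are hypothetical helper names introduced only for this example, not part of any IBM API.

```python
# Assumed Hugging Face model ID; verify it against the ibm-granite repository.
MODEL_ID = "ibm-granite/granite-3b-code-base"


def continuation_only(full_text: str, prompt: str) -> str:
    """Return only the newly generated text if the output echoes the prompt."""
    if full_text.startswith(prompt):
        return full_text[len(prompt):]
    return full_text


def complete_code(prompt: str, max_new_tokens: int = 64) -> str:
    """Ask the model to continue a code prompt (hypothetical helper)."""
    # Imported lazily so the pure helper above works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)

    # Tokenize the prompt into PyTorch tensors and generate a continuation.
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    text = tokenizer.decode(output_ids[0], skip_special_tokens=True)
    return continuation_only(text, prompt)
```

Calling `complete_code("def fibonacci(n):")` would download the model weights on first use, so it needs network access and enough memory for the chosen model size.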

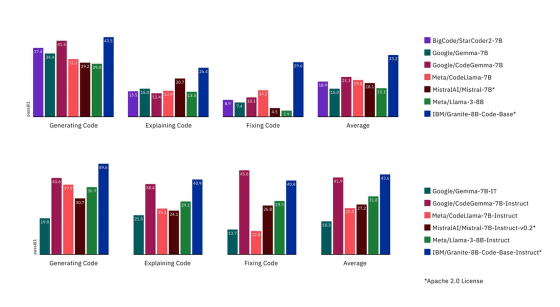

Granite's code generation models come in four parameter sizes: 3B, 8B, 20B, and 34B. Each size is available in two variants: Base Models, which perform basic code generation tasks, and Instruct Models, which are fine-tuned on code instruction datasets. IBM says that these models suit a variety of uses, from complex application modernization tasks to use cases where device memory is constrained.
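To give a sense of why model size matters when device memory is constrained, the following back-of-the-envelope sketch estimates the memory needed just to hold the weights of each size. The parameter counts are the nominal figures from the article, the bytes-per-parameter values are standard for each numeric format but are an assumption about how a model would be loaded, and the estimate ignores activations, the KV cache, and runtime overhead.

```python
# Nominal sizes from the article, in billions of parameters.
# Actual checkpoints may differ slightly from these round numbers.
NOMINAL_PARAMS_BILLIONS = {"3B": 3, "8B": 8, "20B": 20, "34B": 34}

# Bytes used per parameter in common numeric formats (assumed load formats).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, dtype: str) -> float:
    """Approximate weight-only memory in GB (1 GB = 1e9 bytes).

    Billions of parameters times bytes per parameter gives billions of
    bytes, which is already a figure in GB.
    """
    return params_billions * BYTES_PER_PARAM[dtype]


def memory_table() -> dict:
    """Build a size -> {dtype -> GB} table for all nominal sizes."""
    return {
        size: {dtype: weight_memory_gb(params, dtype) for dtype in BYTES_PER_PARAM}
        for size, params in NOMINAL_PARAMS_BILLIONS.items()
    }


if __name__ == "__main__":
    for size, row in memory_table().items():
        cells = ", ".join(f"{dtype}: {gb:g} GB" for dtype, gb in row.items())
        print(f"{size}: {cells}")
```

Under these assumptions the 3B model needs about 6 GB for its weights in 16-bit precision, while the 34B model needs about 68 GB, which is why the smaller sizes are the ones aimed at memory-constrained devices.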

The graph below compares the coding performance of Granite-8B-Code-Base (top, blue) and Granite-8B-Code-Instruct (bottom, blue) with open source AI models of the same size from Google, Meta, and others. In a performance evaluation based on three coding tasks across six programming languages, Granite's code generation models outperformed or were comparable to the other models.

'Evaluated on a comprehensive set of tasks, these Granite code-generation models are shown to match the state-of-the-art among currently available open source code-generation models,' said IBM. 'This versatile family of models is optimized for enterprise software development workflows and demonstrates excellent performance across a range of coding tasks, including code generation, correction and explanation.'

In conjunction with this announcement, Red Hat, a cloud services company and subsidiary of IBM, announced a developer preview of Red Hat Enterprise Linux AI (RHEL AI), a foundation model platform for seamlessly developing, testing, and running Granite generative AI models.

What is RHEL AI? A guide to the open source way for doing AI

https://www.redhat.com/en/blog/what-rhel-ai-guide-open-source-way-doing-ai

IBM Open-Source Granite AI Models, Launches InstructLab Platform | PCMag

https://www.pcmag.com/news/ibm-open-sources-granite-ai-models-launches-instructlab-platform

The combination of InstructLab, a new AI development platform, and RHEL AI is intended to help developers overcome many of the barriers to adopting generative AI.

in Software, Web Service, Posted by log1h_ik